How do you migrate to the cloud with little to no downtime of your applications? We unpack two strategies that work well.

If you’ve been following this migration article series, you should be ready to move on to the next phase of your journey – getting to the good stuff and migrating your workloads.

Before we get there, let’s quickly recap all the things you should have in place before you kick off your migration.

- You know what your security and compliance goals are, and what services you can (or can’t) use in your cloud architecture

- You have deployed a couple of tools that will make the migration journey just a little bit easier

- Remember to run a tool that will help you understand how to migrate in an optimised state. One such tool is the AWS Server Migration Service, which will tell you how your current server is being utilised and what instance size you will require in AWS to run optimally

- You understand your Total Cost of Ownership (TCO)

- By running one of the earlier tools, you make it easy to understand what you will need to run your workloads, but most importantly, it will help you understand what your consumption costs will be. This helps you determine your TCO

- Cloud TCO is the total cost associated with your new cloud environment – often compared to the total cost of your former server or data centre deployment

- Both numbers are important not only after you migrate to the cloud (when you likely need to prove value and keep costs optimised) but also before you migrate (when they can help you make a business case for migration, fully understand your options, and set expectations for cost, return, and the timing of those costs and returns)

- It’s important to understand both TCO and Cloud TCO before you can determine the business case for migrating to the cloud. But TCO isn’t just made up of infrastructure costs and the operational costs to run and maintain that environment. You also need to be mindful of benefits such as agility, flexibility and uptime, which in turn could result in a better customer / user experience and hopefully an increase in revenue for your organisation

- You know what you will be doing with each application during the migration and which of the 6 migration strategies you’ll follow for each application

- You’ve reviewed your operations and determined if any changes need to be made to effectively migrate to the cloud and manage the environment when you are there

- Shared Responsibility: this is key. You might have read about this before. Go read it again 😊

- You must understand what your responsibility is when it comes to the ongoing operation of your environment
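The TCO comparison from the checklist above can be sketched in a few lines of Python. This is only a rough illustration: the `CostModel` class, the function names and every figure are hypothetical, and it covers costs only, not the agility, uptime or revenue benefits mentioned earlier.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    """Monthly cost inputs for one environment (all figures hypothetical)."""
    infrastructure: float  # servers, storage, network, or cloud consumption
    operations: float      # staff time, licences, support contracts

    def total(self, months: int) -> float:
        """Total cost of ownership over the given number of months."""
        return (self.infrastructure + self.operations) * months


def tco_savings(current: CostModel, cloud: CostModel,
                migration_cost: float, months: int) -> float:
    """A positive result means the cloud option is cheaper over the period."""
    return current.total(months) - (cloud.total(months) + migration_cost)


def break_even_month(current: CostModel, cloud: CostModel,
                     migration_cost: float, horizon: int = 60) -> Optional[int]:
    """First month in which cumulative savings cover the one-off migration cost."""
    for month in range(1, horizon + 1):
        if tco_savings(current, cloud, migration_cost, month) >= 0:
            return month
    return None  # no break-even within the horizon


# Made-up example inputs, purely for illustration.
on_prem = CostModel(infrastructure=10_000, operations=6_000)
cloud = CostModel(infrastructure=7_000, operations=4_000)

print(tco_savings(on_prem, cloud, migration_cost=50_000, months=36))
print(break_even_month(on_prem, cloud, migration_cost=50_000))
```

Running the numbers this way before you migrate gives you both the size of the expected return and the timing of it, which is what the business case needs.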

Okay, so you are now ready to kick off the migration, but how do you make it as easy as possible while ensuring there is very little to no downtime of your applications?

Jaco Venter, head of cloud management at BBD, says that while there are quite a few options which could help make your move to the AWS cloud a little easier, there are two strategies that he believes work very well.

CloudEndure

A tool from AWS, CloudEndure has 2 primary functions: Disaster Recovery and Migrations.

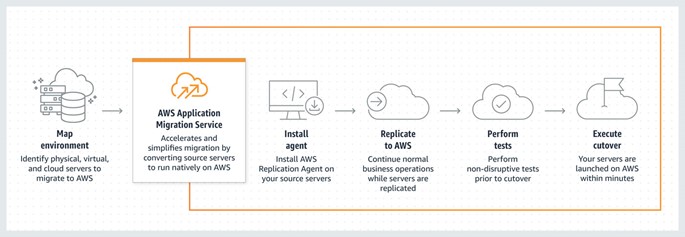

The migration component is called AWS Application Migration Service (AWS MGN).

Using a component such as AWS MGN allows you to maintain normal business operations throughout the replication process while you migrate to the cloud. Basically, because it continuously replicates source servers, there is little to no performance impact on your operations during this process. Continuous replication also makes it easy to conduct non-disruptive tests and shorten cutover windows while you move the network identity to the cloud “computer”.

“AWS MGN reduces overall migration costs with no need to invest in multiple migration solutions, specialized cloud development, or application-specific skills because it can be used to lift and shift any application from any source infrastructure that runs supported operating systems (OS),” explains Venter.

Here’s an example of how the process works:

Implementation begins by installing the AWS Replication Agent on your source servers. Once it’s installed, you can view and define the replication settings. AWS MGN uses these settings to create and manage a Staging Area Subnet with lightweight Amazon EC2 instances that act as Replication Servers, along with low-cost staging Amazon EBS volumes.

These Replication Servers receive data from the agent running on your source servers and write this data to the staging Amazon EBS volumes. Your replicated data is compressed and encrypted in transit and can be encrypted at rest using EBS encryption. AWS MGN keeps your source servers up to date on AWS using continuous, block-level data replication. It uses your defined launch settings to launch instances when you conduct non-disruptive tests or perform a cutover. When you launch test or cutover instances, the service automatically converts your source servers to boot and run natively on AWS. After confirming that your launched instances are operating properly, you can decommission your source servers. You can then choose to modernise your applications by leveraging additional services and capabilities.

“In a nutshell, utilising components such as the AWS Application Migration Service during your migration means that you can keep your operations running while simultaneously moving them to the cloud – it’s a great trick for ensuring a smooth transition to the cloud,” says Venter.
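To make the test and cutover steps a little more concrete, here is a minimal sketch in Python. It assumes you pass in a boto3 `mgn` client (for example `boto3.client("mgn")`) and that its `describe_source_servers`, `start_test` and `start_cutover` operations behave as described in the AWS API reference, so check that reference before relying on it. The helper function names are hypothetical and not part of any AWS library.

```python
from typing import Any, Dict, Iterator, List


def iter_source_servers(mgn: Any) -> Iterator[Dict]:
    """Yield every source server, following nextToken pagination."""
    kwargs: Dict[str, Any] = {"filters": {}}
    while True:
        page = mgn.describe_source_servers(**kwargs)
        yield from page.get("items", [])
        token = page.get("nextToken")
        if not token:
            return
        kwargs["nextToken"] = token


def servers_in_state(mgn: Any, state: str) -> List[str]:
    """IDs of source servers whose lifecycle state matches, e.g. READY_FOR_TEST."""
    return [
        server["sourceServerID"]
        for server in iter_source_servers(mgn)
        if server.get("lifeCycle", {}).get("state") == state
    ]


def launch_tests(mgn: Any) -> List[str]:
    """Launch non-disruptive test instances for servers that are ready for testing."""
    ids = servers_in_state(mgn, "READY_FOR_TEST")
    if ids:
        mgn.start_test(sourceServerIDs=ids)
    return ids


def launch_cutover(mgn: Any) -> List[str]:
    """Launch cutover instances for servers already marked ready for cutover."""
    ids = servers_in_state(mgn, "READY_FOR_CUTOVER")
    if ids:
        mgn.start_cutover(sourceServerIDs=ids)
    return ids
```

Because the functions only take a client object, you can rehearse the flow against a stub before pointing it at a real account. Decommissioning the source servers stays a deliberate, manual decision in this sketch.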

He adds that AWS MGN is a great option for lift and shift projects. In other projects, however, you might be modernising your application as you migrate, which means you can’t simply replicate, decommission the one side and switch on the new environment without any interventions.

That’s where services such as Route 53 come in.

Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service. It is designed to give software engineers and businesses an extremely reliable and cost-effective way to route end users to internet applications by translating names like www.example.com into numeric IP addresses such as 192.0.2.1. Why does this need to happen? Because those numeric addresses are what computers use to connect to each other.

Using Route 53’s DNS routing function means you can manage which environment your users will connect to – a handy tip if you want to make sure that your end users have no disruption or break in what they’re viewing through your migration. At the point where you have completed your internal testing and are ready to switch over to your new cloud environment, DNS routing allows you to redirect traffic so that your users seamlessly connect to the new environment. During this process your users won’t be impacted and are able to simply continue operating as if nothing changed.

In the event that something does not quite go as planned (“And it can happen!” remarks Venter), you can redirect traffic back to your previous environment, allowing you to address any challenges then switch back to the new cloud setup once again.
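One way to do this kind of switch (and switch back) is with Route 53 weighted records. It is only one of several routing options, and not necessarily the one Venter has in mind. The sketch below builds the `ChangeBatch` payload that Route 53’s `ChangeResourceRecordSets` operation expects. The function names, the set identifiers `on-premises` and `aws`, and the second IP address are assumptions made for illustration.

```python
from typing import Dict


def weighted_record(name: str, ip: str, set_id: str, weight: int, ttl: int = 60) -> Dict:
    """One UPSERT change for a weighted A record."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "A",
            "SetIdentifier": set_id,  # distinguishes records sharing a name
            "Weight": weight,         # Route 53 accepts 0 to 255
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip}],
        },
    }


def traffic_shift_batch(name: str, old_ip: str, new_ip: str, percent_new: int) -> Dict:
    """Change batch that sends roughly percent_new% of lookups to the new environment."""
    if not 0 <= percent_new <= 100:
        raise ValueError("percent_new must be between 0 and 100")
    return {
        "Comment": f"Send {percent_new}% of {name} traffic to the new environment",
        "Changes": [
            weighted_record(name, old_ip, "on-premises", 100 - percent_new),
            weighted_record(name, new_ip, "aws", percent_new),
        ],
    }


# Cut over fully, or roll back by sending everything to the old environment.
cutover = traffic_shift_batch("www.example.com.", "192.0.2.1", "198.51.100.1", 100)
rollback = traffic_shift_batch("www.example.com.", "192.0.2.1", "198.51.100.1", 0)

# With boto3 this could be applied roughly as follows (zone ID is a placeholder):
# boto3.client("route53").change_resource_record_sets(
#     HostedZoneId="<your hosted zone id>", ChangeBatch=cutover)
```

Keeping the TTL low during the migration window helps here, because resolvers cache DNS answers and a long TTL would delay both the cutover and any rollback.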

A migration plan is all about mitigating risk and ensuring there are clearly defined metrics of what “good” looks like. It’s also about knowing at what point you should deem the project a success and when to roll back – “What’s that old adage? Failing to plan is planning to fail”.

One last tip: Make it easy to roll back if you have to.
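Writing down what “good” looks like before cutover makes the roll-back decision far less emotional on the day. As a rough sketch, the agreed criteria could be captured like this. The metric names and threshold values are purely hypothetical, so substitute whatever your own migration plan defines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Thresholds agreed before cutover (values here are hypothetical)."""
    max_error_rate: float      # fraction of failed requests, e.g. 0.01 = 1%
    max_p95_latency_ms: float  # 95th percentile response time in milliseconds


def should_roll_back(error_rate: float, p95_latency_ms: float,
                     criteria: SuccessCriteria) -> bool:
    """True if any observed metric breaches the agreed thresholds."""
    return (error_rate > criteria.max_error_rate
            or p95_latency_ms > criteria.max_p95_latency_ms)


criteria = SuccessCriteria(max_error_rate=0.01, max_p95_latency_ms=500)

print(should_roll_back(0.002, 320, criteria))  # within both thresholds
print(should_roll_back(0.05, 320, criteria))   # error rate too high
```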

If you’re in need of a cloud partner to guide you through the tools, compliance, strategies, operational changes and downtime dependencies – chat to us.